Titles and Abstracts

Titles and Abstracts Keynote Lectures November 28

KEYNOTE LECTURE I: The role of AI and deep learning in Medical Imaging

Professor Paul Suetens,

Head of Division ESAT-Processing Speech and Images, KU Leuven, and Chairman Medical Imaging Research Center, UZ Leuven, Leuven, BE

Computed imaging caused a revolution in medical imaging in the 1970s. One of its consequences is a tsunami of data: looking back, we see a continuous increase in both the number of exams and the number of publications. The volume of image data puts a radiology service under time pressure, and it was under these conditions that the radiologist entered the digital 21st century. New technologies, such as artificial intelligence, big data analytics, neural networks, deep learning and cloud computing, are changing our society and affect many professions. But what is their role in medical imaging? Will they influence a radiologist's day? Will they cause a new revolution, comparable to computed imaging in the 1970s? To answer these questions, we need to know the current capabilities and limitations of this technology.

A common thread through the history of computer vision is learning from data, progressing gradually from shallow to deep learning. Only recently, however, have computers become able to surpass human-level performance in specific, narrowly defined applications. Commercial products are following and are entering the physician's practice today. This is expected to change the workflow of a radiology service in the context of value-based healthcare. However, caution is advised: deep neural networks are still vulnerable and seem to work fundamentally differently from humans. Further research is therefore needed to provide solutions that are explainable and reliable.

KEYNOTE LECTURE II: The OnePlanet Research Center – Applying nanoelectronics and AI in food, health, agriculture and environment

Professor Christiaan Van Hoof,

Vice President Connected Health Solutions, imec, Leuven, BE

The 21st century is witness to large global challenges related to health, food, sustainability and the environment. While these are formidable challenges, they also represent a gigantic opportunity to improve people's lives on a global scale while at the same time creating new economic opportunities. The OnePlanet Research Center is a multi-disciplinary collaboration in Gelderland between imec, Radboud University & Medical Center, and Wageningen University & Research where nanoelectronics and analytics innovations are used to solve problems related to personalized health, personalized nutrition, sustainable food production and reduced environmental impact. Four open innovation research programs have been defined: Data Driven Nutrition & Health, Citizen Empowerment, Precision Production & Processing, and Tailored Supply Chain. In each program, interdisciplinary teams of researchers and engineers from the three founding partners will conduct application-driven R&D along application and enabling technology (HW/SW) roadmaps. This presentation will give an overview of the vision and activities of the OnePlanet Research Center.

KEYNOTE LECTURE III: Interpretable models for biological network mining

Professor Celine Vens,

Department of Public Health and Primary Care, Kulak Kortrijk Campus, KU Leuven ITEC, KU Leuven – imec, Kortrijk, BE

Networks are omnipresent in the biomedical domain: drug-target interaction networks, protein-protein interaction networks and patient-drug response networks are just a few examples. An important task in this domain is to predict whether a link exists between two entities. This task can be modelled as a supervised machine learning problem. Interpretable models such as decision trees can lead to novel biological insights by providing an explanation for the predictions they make. The interaction prediction problem can be transformed into a format that allows the use of a standard decision tree learner; however, this transformation inevitably loses valuable information about the network structure. We present a new decision tree learner that is specifically designed to learn from interaction data. As a by-product, the decision tree provides a complete bi-clustering of the data set. We further discuss two extensions that boost predictive performance: constructing an ensemble of decision trees, and combining it with output space reconstruction methods such as matrix factorization.
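The "standard" transformation mentioned above can be sketched as follows, under the assumption that it is the usual pairwise approach: every (row, column) pair of the interaction matrix becomes one sample whose features concatenate the two entities' descriptors. The second function is a hypothetical illustration of the alternative idea of splitting the matrix itself along its rows or its columns, so that each node keeps a sub-matrix (a bi-cluster) rather than a bag of independent pairs; it is not the learner presented in the talk. All feature values and names are made up.

```python
def to_pairwise_dataset(row_features, col_features, interactions):
    """Flatten an interaction matrix into one (features, label) sample per entity pair."""
    X, y = [], []
    for i, r in enumerate(row_features):
        for j, c in enumerate(col_features):
            X.append(list(r) + list(c))  # concatenated descriptors of the pair
            y.append(interactions[i][j])
    return X, y


def split_interaction_matrix(Y, features, axis, feature_idx, threshold):
    """Hypothetical node split: threshold one feature of the rows (axis=0) or of the
    columns (axis=1); both children remain sub-matrices of the interaction matrix."""
    if axis == 0:
        left = [row for row, f in zip(Y, features) if f[feature_idx] <= threshold]
        right = [row for row, f in zip(Y, features) if f[feature_idx] > threshold]
    else:
        keep_left = [j for j, f in enumerate(features) if f[feature_idx] <= threshold]
        keep_right = [j for j, f in enumerate(features) if f[feature_idx] > threshold]
        left = [[row[j] for j in keep_left] for row in Y]
        right = [[row[j] for j in keep_right] for row in Y]
    return left, right


drugs = [[0.1, 1.0], [0.7, 0.2]]   # hypothetical drug descriptors (matrix rows)
targets = [[5.0], [2.0], [3.5]]    # hypothetical protein descriptors (matrix columns)
Y = [[1, 0, 0],
     [0, 1, 1]]                    # 1 = known interaction

X, y = to_pairwise_dataset(drugs, targets, Y)
left, right = split_interaction_matrix(Y, targets, axis=1, feature_idx=0, threshold=4.0)
```

In the flattened data set a standard learner no longer knows that samples 0 to 2 share the same drug; the column split instead yields the sub-matrices `[[0, 0], [1, 1]]` and `[[1], [0]]`, each of which keeps the network's row/column structure.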

Titles and Abstracts Contributed Talks

Dealing With Sporadic Observations For Critical Care Patient Trajectories

Jaak Simm1, Edward De Brouwer1*, Adam Arany1, Yves Moreau1,

1KU Leuven, Stadius Center for Dynamical Systems, Signal Processing and Data Analytics, Belgium

Keywords: biosignals - medical/clinical engineering

1. Introduction

The analysis of patient trajectories (i.e., the medical history of patients as found in Electronic Health Records (EHR)) is receiving increasing attention in the machine learning community. Correctly harnessing this type of data is expected to be crucial for, among others, delivering personalized medicine and assessing drug efficacy in real-world environments. However, modeling such data is challenging because of its sporadic nature: signals are sampled irregularly (only observed at medical visits), and not all signals are measured each time (only some measurements are taken at each visit).

In particular, the EHRs of critical care patients are an archetypal example in which a broad range of signals is sporadically collected. Moreover, successful machine learning methods can have a large impact in this area of medicine, where speed and efficacy are crucial.

In this work, we present GRU-ODE-Bayes [2], a new methodology for dealing with sporadic time series using continuous-time modeling, and illustrate its performance on a critical care dataset, MIMIC-III [3]. Our model outperforms the state-of-the-art methods we evaluated for clinical signal forecasting.

2. Materials And Methods

Our approach builds upon the seminal Neural-ODE architecture [1] and can be seen as a filtering method with a continuous-time, multi-dimensional hidden process. Intuitively, patient observations are generated by an unobserved latent process that represents the patient's health status. Our model aims to learn the non-linear dynamics of this latent process from the available data and to integrate it forward in time to make accurate predictions.

Our model comprises two main building blocks: (1) GRU-ODE, a neural-network-parametrized ordinary differential equation that propagates the hidden process to any future time, and (2) GRU-Bayes, a network that updates the hidden process as soon as a measurement is observed, mimicking a Bayesian update.
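The interplay of the two blocks can be sketched as a filtering loop. This is a minimal, untrained illustration under stated assumptions, not the published implementation. It assumes the GRU-ODE dynamics are the continuous-time form of a GRU update with zero input, dh/dt = (1 - z) ⊙ (g - h), integrated here with explicit Euler steps. It also replaces GRU-Bayes with a simplified jump in which a discrete GRU cell reads the observed values multiplied by their mask; the published GRU-Bayes update is more elaborate. All sizes, weights and names (`run_filter`, `jump_update`, ...) are hypothetical.

```python
import math
import random

random.seed(0)
H, D = 4, 2  # hidden size and number of signals (hypothetical, tiny)


def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def gru_cell(h, x, W, U):
    """Discrete GRU cell; W and U hold (update, reset, candidate) weight matrices."""
    z = [sigmoid(a + b) for a, b in zip(matvec(W[0], x), matvec(U[0], h))]
    r = [sigmoid(a + b) for a, b in zip(matvec(W[1], x), matvec(U[1], h))]
    rh = [ri * hi for ri, hi in zip(r, h)]
    g = [math.tanh(a + b) for a, b in zip(matvec(W[2], x), matvec(U[2], rh))]
    return [zi * hi + (1 - zi) * gi for zi, hi, gi in zip(z, h, g)]


# Untrained, randomly initialized parameters (illustration only).
W_ode = [rand_matrix(H, 1) for _ in range(3)]       # unused in effect: the ODE input is zero
U_ode = [rand_matrix(H, H) for _ in range(3)]
W_jump = [rand_matrix(H, 2 * D) for _ in range(3)]  # input = masked values + mask
U_jump = [rand_matrix(H, H) for _ in range(3)]
readout = rand_matrix(D, H)                         # hidden state -> predicted signals


def gru_ode_rhs(h):
    """dh/dt = (1 - z) * (g - h), i.e. the GRU increment h_new - h with zero input."""
    return [new - old for new, old in zip(gru_cell(h, [0.0], W_ode, U_ode), h)]


def propagate(h, dt, n_steps=10):
    """Explicit Euler integration of the GRU-ODE over a time gap of length dt."""
    step = dt / n_steps
    for _ in range(n_steps):
        h = [hi + step * d for hi, d in zip(h, gru_ode_rhs(h))]
    return h


def jump_update(h, values, mask):
    """Simplified stand-in for GRU-Bayes: only observed signals enter the input."""
    x = [v * m for v, m in zip(values, mask)] + [float(m) for m in mask]
    return gru_cell(h, x, W_jump, U_jump)


def run_filter(observations, t_end, h0):
    """observations: (time, values, mask) tuples sorted by time."""
    h, t = h0, 0.0
    for t_obs, values, mask in observations:
        h = propagate(h, t_obs - t)        # continuous evolution between visits
        h = jump_update(h, values, mask)   # discrete update at an observation
        t = t_obs
    return propagate(h, t_end - t)         # forecast: no further observations


obs = [(0.5, [1.2, 0.0], [1, 0]), (2.0, [0.8, -0.3], [1, 1]), (3.5, [0.0, 0.4], [0, 1])]
h_final = run_filter(obs, t_end=5.0, h0=[0.0] * H)
forecast = matvec(readout, h_final)
```

With Euler steps no larger than 1, every step and every jump is a convex combination of the previous state and a tanh output, so the hidden state stays within [-1, 1].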

We used the MIMIC-III database and selected a subset of 21,250 critical care patients monitored over 48 hours, covering 96 types of physiological signals (those suggested as most important in the literature). We evaluate the methodology on forecasting future signal values from a 36-hour observation window, using the mean squared error (MSE) as the metric.
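The evaluation protocol can be sketched as a time split plus an MSE restricted to the entries that were actually measured. The masking is an assumption: the abstract only names the metric, but not every signal is measured at every visit. The record layout `(time_h, values, mask)`, the function names and all numbers are hypothetical.

```python
def split_at_cutoff(records, cutoff_hours=36.0):
    """Split (time_h, values, mask) records into observation window and forecast targets."""
    history = [r for r in records if r[0] <= cutoff_hours]
    future = [r for r in records if r[0] > cutoff_hours]
    return history, future


def masked_mse(predictions, future):
    """Mean squared error over measured entries only (mask == 1)."""
    total, count = 0.0, 0
    for pred, (_, values, mask) in zip(predictions, future):
        for p, v, m in zip(pred, values, mask):
            if m:
                total += (p - v) ** 2
                count += 1
    return total / count if count else float("nan")


records = [
    (12.0, [0.1, 0.0], [1, 0]),
    (30.0, [0.4, -0.2], [1, 1]),
    (40.0, [0.5, 0.0], [1, 0]),    # second signal not measured at this visit
    (46.0, [-1.0, 2.0], [1, 1]),
]
history, future = split_at_cutoff(records)
predictions = [[0.3, 9.9], [-1.0, 1.0]]  # hypothetical forecasts for the two future visits
mse = masked_mse(predictions, future)    # the 9.9 is ignored because it is masked out
```

Here the score averages the three measured errors, (0.04 + 0 + 1) / 3.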

3. Results And Discussion

We compared our model with six state-of-the-art baselines, including NeuralODE, Sequential-VAE and T-LSTM. Our model reaches an MSE of 0.48, compared with 0.62 for the best baseline; a constant model has an MSE of 1.
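The constant-model figure can be read as follows, assuming (the abstract does not say so) that each signal $s$ was standardized with its mean $\mu$ and population standard deviation $\sigma$. A model that always predicts the mean, i.e. 0 after standardization, then scores exactly the variance of the standardized signal:

```latex
x_i = \frac{s_i - \mu}{\sigma}, \qquad
\mathrm{MSE}_{\text{const}}
  = \frac{1}{n}\sum_{i=1}^{n} \left(x_i - 0\right)^2
  = \frac{1}{\sigma^2} \cdot \frac{1}{n}\sum_{i=1}^{n} \left(s_i - \mu\right)^2
  = 1
```

On held-out data the value is approximately rather than exactly 1. Under this reading, an MSE of 0.48 means the remaining squared error is roughly half of the signal variance, versus about 62% for the best baseline.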

We further show that our model inherently encodes a continuity prior with a tunable Lipschitz constant. This yields better performance than the baselines when the number of patients is low (a rare-disease setting).
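One way to see where a continuity prior comes from is to assume that the GRU-ODE takes the continuous-time GRU form, with update gate $z$ from a sigmoid and candidate $g$ from a tanh. The hidden trajectory then has a bounded slope. This is a short derivation under that assumption, not the proof in [2], and it does not show how the constant is tuned:

```latex
\frac{d\mathbf{h}(t)}{dt} = \bigl(1 - \mathbf{z}(t)\bigr) \odot \bigl(\mathbf{g}(t) - \mathbf{h}(t)\bigr),
\qquad z_k(t) \in (0,1), \quad g_k(t) \in (-1,1)

h_k(t) \in [-1,1] \;\Rightarrow\;
\left|\frac{dh_k}{dt}\right| = \bigl(1 - z_k\bigr)\,\bigl|g_k - h_k\bigr| < 1 \cdot 2 = 2
\;\Rightarrow\; |h_k(t) - h_k(s)| \le 2\,|t - s|
```

The premise $h_k \in [-1,1]$ is self-maintaining. At $h_k = 1$ the derivative $(1 - z_k)(g_k - 1)$ is negative, and at $h_k = -1$ it is positive, so a trajectory that starts inside the interval never leaves it.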

References

[1] Chen T.Q. et al., Neural Ordinary Differential Equations, NeurIPS, Montreal, Canada, 2018.

[2] De Brouwer E. et al., GRU-ODE-Bayes: continuous modeling of sporadically observed time series, NeurIPS, Vancouver, Canada, 2019.

[3] Johnson A.E. et al., MIMIC-III, a freely accessible critical care database, Scientific Data, 2016.

Towards A Clinical Insight Into ICU Mortality Using Machine Learning

Ahmed Y. A. Amer 1,2, Vranken J. 3,4, Wouters F. 3,4, Mesotten D. 3,4, Vandervoort P. 3,4, Storms V. 3,4, Luca S. 5, Aerts J.-M. 2, Vanrumste B. 1,

1 KU Leuven, E-MEDIA, Campus Group T and STADIUS, ESAT, Belgium.

2 KU Leuven, M3-BIORES, Department of Biosystems, Leuven, Belgium.

3 Hasselt University, Faculty of Medicine and Life Sciences, Hasselt, Belgium.

4 ZOL, Departments of Anesthesiology, Cardiology and Future Health, Genk, Belgium.

5 Ghent University, Department of Data Analysis and Mathematical Modelling, Ghent, Belgium.

Keywords: medical/clinical engineering – modeling of physiological systems

1. Introduction

Machine learning provides powerful computational capabilities that can help analyze complex problems. However, machine learning is mostly presented as a set of black-box modeling algorithms that provide no clear insight into the analyzed problem. A partial move from black-box to grey-box modeling can be achieved by providing machine learning algorithms with a set of meaningful features. This approach has the potential to yield clinical insight into the problem of intensive care unit (ICU) mortality prediction. In the past, multiple scoring systems (e.g., APACHE, SAPS) have been developed to predict ICU patient mortality. However, these scoring systems are population-based and often use summarized, non-granular data. In our study [1], we investigated a set of measured vital signs and mortality events at discharge from the ICU. Our aim was to develop a machine learning based method that extracts clinically interpretable features for ICU mortality prediction.

2. Materials And Methods

The data were provided by the intensive care unit and coronary care unit of Ziekenhuis Oost-Limburg (ZOL), Genk, Belgium. The study population consists of 447 patients with 450 admissions, of which 170 are labeled ‘mortality’ and 280 ‘survival’. Observations were collected at a sampling rate of 0.5-1 observations per hour. The investigated vital signs are heart rate, respiration rate, oxygen saturation and blood pressure; the latter comprises systolic, diastolic and mean arterial pressure. Patients are labeled with mortality or survival at discharge. The classifier is a linear support vector machine (SVM) with maximal error penalization. This classifier was chosen for two properties: it maximizes the margin between the two classes with minimal tolerance to error, and it predicts the outcome from a linear combination of the features.
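A linear SVM of this kind can be sketched as a primal soft-margin SVM trained by batch subgradient descent. We read "maximal error penalization" as a large penalty parameter C, which makes margin violations expensive (near hard-margin behavior); this reading, the hyperparameters and the toy data are assumptions. In practice, one would use an established implementation, such as scikit-learn's `LinearSVC`, on standardized features.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(X, y, C=100.0, lr=1e-4, epochs=2000):
    """Minimize 0.5*||w||^2 + C * sum(max(0, 1 - y_i * (w.x_i + b))) by subgradient descent.

    A large C penalizes margin violations heavily (near hard-margin behaviour).
    Labels must be +1 / -1.
    """
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = list(w)  # gradient of the regularizer 0.5*||w||^2
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:  # margin violated: hinge term is active
                for j in range(n_features):
                    grad_w[j] -= C * yi * xi[j]
                grad_b -= C * yi
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    return 1 if dot(w, x) + b >= 0 else -1


# Toy 2-D feature vectors; +1 = mortality, -1 = survival (made-up data).
X = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [3.0, 3.0], [4.0, 3.0], [3.0, 4.0]]
y = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(X, y)
preds = [predict(w, b, x) for x in X]
```

Because the decision function is the linear combination w·x + b, each learned weight shows how strongly a feature pushes a prediction toward mortality, which is what makes such a model usable for grey-box analysis.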

3. Results And Discussion

The investigation starts by extracting statistical features (i.e., minimum, maximum, mean, median, standard deviation, variance and energy) from all vital signs and their first derivatives. Because performance was limited, dynamic features (i.e., mean-crossing rate and outlier detection) were also extracted from the vital signs in the next phase. An in-depth investigation of the deceased patients who were misclassified (false negatives) revealed several physiological observations. Some of these patients showed abnormal blood pressure behavior, with an abnormally low or high pulse pressure. Moreover, some false-negative patients experienced frequent drops in oxygen saturation and respiration rate below specific thresholds. Adding these physiological features to the previously mentioned ones markedly improved performance; after fine-tuning, the classifier reached an accuracy of 91.56%, sensitivity of 90.59%, precision of 86.52% and F1-score of 88.50%.
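The feature types named above can be sketched per vital-sign series as follows. Definitions the abstract leaves open are assumptions: energy as the sum of squared samples, the mean-crossing rate as the fraction of consecutive pairs on opposite sides of the mean, and threshold drops counted as downward crossings of a fixed threshold (the 90% SpO2 threshold is purely illustrative). Outlier detection is not covered, and the data are made up.

```python
import statistics


def statistical_features(signal):
    """Summary statistics of one series (energy assumed to be the sum of squares)."""
    return {
        "min": min(signal),
        "max": max(signal),
        "mean": statistics.mean(signal),
        "median": statistics.median(signal),
        "std": statistics.pstdev(signal),
        "variance": statistics.pvariance(signal),
        "energy": sum(v * v for v in signal),
    }


def first_derivative(signal, dt_hours=1.0):
    """Finite-difference derivative between consecutive samples."""
    return [(b - a) / dt_hours for a, b in zip(signal, signal[1:])]


def mean_crossing_rate(signal):
    """Fraction of consecutive sample pairs lying on opposite sides of the series mean."""
    m = statistics.mean(signal)
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a - m) * (b - m) < 0)
    return crossings / (len(signal) - 1)


def pulse_pressure(systolic, diastolic):
    return [s - d for s, d in zip(systolic, diastolic)]


def drops_below(signal, threshold):
    """Number of times the signal falls from >= threshold to below it."""
    return sum(1 for a, b in zip(signal, signal[1:]) if a >= threshold > b)


heart_rate = [80, 90, 70, 100, 60]  # made-up hourly samples
spo2 = [97, 95, 89, 93, 88, 96]
features = statistical_features(heart_rate)
features.update({f"d_{k}": v for k, v in statistical_features(first_derivative(heart_rate)).items()})
features["hr_mean_crossing_rate"] = mean_crossing_rate(heart_rate)
features["spo2_drops_below_90"] = drops_below(spo2, 90)  # hypothetical threshold
features["pulse_pressure_min"] = min(pulse_pressure([120, 85, 150], [80, 70, 60]))
```

Each admission thus becomes one fixed-length feature vector, which is the input format the linear SVM described in Materials and Methods expects.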

Acknowledgment

This research was funded by a European Union grant through the Interreg V-A Euregio Meuse-Rhine wearIT4health project. The ICU data were provided by ZOL hospital in Genk, Belgium.

References

[1] Amer, A.Y.A. et al. "Feature Engineering for ICU Mortality Prediction Based on Hourly to Bi-Hourly Measurements." Applied Sciences 9.17 (2019): 3525.

Supporting Ambient-Intelligent Healthcare Interventions Through Cascading Reasoning

Mathias De Brouwer1*, Pieter Bonte1, Filip De Turck1, Femke Ongenae1,

1 Ghent University – imec, IDLab, Department of Information Technology, Ghent, Belgium

Keywords: medical engineering

1. Introduction

The ultimate ambient-intelligent care rooms of the future, in smart hospitals and nursing homes, will contain many Internet of Things (IoT)-enabled devices. These are equipped with many sensors that monitor environmental and body parameters and detect the wearable devices of patients and nurses, so that they continuously produce streams of data. Moreover, a smart hospital typically has domain and background knowledge, including information on pathologies and electronic patient profiles. This knowledge can be combined with the real-time IoT sensor data streams to derive new knowledge about the environment and the patient’s current condition [1].

Integrating these heterogeneous, voluminous and complex data sources in real time is a challenging task [1]. Yet in healthcare, decision making is time-critical, and alarming situations require a prompt response. In addition, resources are limited and privacy must be considered.

To deal with these challenges, Semantic Web technologies can be deployed [1]. They semantically enrich all data using an ontology, i.e., a formal semantic model, after which semantic reasoning techniques can reason on this joint data to derive new knowledge. To handle the trade-off between the complexity of this reasoning and the velocity of the IoT data streams, cascading reasoning is an emerging research approach that constructs a processing hierarchy of semantic reasoners. Integrating this technique into a generic platform makes it possible to solve the issues presented above in smart healthcare [2].

2. Materials And Methods

The architecture of a generic cascading reasoning framework consists of different types of components [2], which can be deployed on multiple physical devices across an IoT network in a hospital or nursing home.

The first component type semantically annotates raw sensor observations so that all data is represented in the common ontology. The other two component types are stream processing and semantic reasoning components, which can be dynamically pipelined throughout the IoT network to realize the vision of cascading reasoning. Stream processing components mainly aggregate and filter the sensor data and typically run locally, e.g., in a patient’s room. Semantic reasoning components perform more complex reasoning on the filtered data and run at the edge of the network or in the cloud. Pipelining them creates a cascade of components.
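A toy version of the three component types can be sketched in plain Python. Everything here is hypothetical. The observation triples borrow predicate names from the W3C SOSA vocabulary, while the `ex:` terms, the threshold and the light-sensitivity rule are invented for illustration. A single if-rule stands in for an ontology-based semantic reasoner. This is not the framework of [2].

```python
from statistics import mean


def annotate(sensor_id, prop, value, timestamp):
    """Component 1: wrap a raw reading as (subject, predicate, object) triples."""
    obs = f"ex:obs_{sensor_id}_{timestamp}"
    return [
        (obs, "rdf:type", "sosa:Observation"),
        (obs, "sosa:madeBySensor", f"ex:{sensor_id}"),
        (obs, "sosa:observedProperty", prop),
        (obs, "sosa:hasSimpleResult", value),
    ]


def local_stream_processor(window, prop, threshold):
    """Component 2 (e.g. in the patient's room): aggregate a window, forward only unusual events."""
    values = []
    for triples in window:
        facts = {p: o for (_, p, o) in triples}
        if facts["sosa:observedProperty"] == prop:
            values.append(facts["sosa:hasSimpleResult"])
    if values and mean(values) > threshold:
        return {"property": prop, "mean": mean(values)}
    return None  # nothing is sent upstream, saving network traffic


# Hypothetical background knowledge available at the edge or in the cloud.
PATIENTS = {"ex:patient_1": {"diagnosis": "ex:Concussion", "room": "ex:room_101"}}
SENSITIVE_TO = {"ex:Concussion": {"ex:LightIntensity"}}


def edge_reasoner(event, patient_id):
    """Component 3: combine a filtered event with background knowledge (a rule, not OWL reasoning)."""
    profile = PATIENTS[patient_id]
    if event["property"] in SENSITIVE_TO.get(profile["diagnosis"], set()):
        return f"alert nurse: {event['property']} too high in {profile['room']}"
    return None


window = [annotate("light_1", "ex:LightIntensity", v, t) for t, v in enumerate([420, 510, 480])]
event = local_stream_processor(window, "ex:LightIntensity", threshold=400)
alert = edge_reasoner(event, "ex:patient_1") if event else None
```

Only the aggregated event leaves the room, and a quiet window (e.g. readings of 100 and 120) returns `None`, so nothing is forwarded.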

3. Results And Discussion

A prototype of a cascading reasoning framework has already been evaluated on a smart hospital use case involving the monitoring of a patient with a concussion [2]. The evaluation shows that the total system latency is mostly below 5 s, allowing a nurse to intervene promptly in alarming situations. It thus demonstrates how a cascading reasoning platform can address the existing issues in smart healthcare, while also offering additional advantages such as reduced network traffic and improved local autonomy.

Future research consists of automatically and adaptively distributing a cascading reasoning system over an IoT network in an optimal way.

References

[1] Barnaghi, P. et al. (2012). Semantics for the Internet of Things: early progress and back to the future. IJSWIS, 8(1), 1-21.

[2] De Brouwer, M. et al. (2018). Towards a Cascading Reasoning Framework to Support Responsive Ambient-Intelligent Healthcare Interventions. Sensors, 18(10), 3514.

Adaptive Ultrasound Beamforming Through Model-Aware Intelligent Agents

Ben Luijten1*, Frederik J. de Bruijn2, Harold A.W. Schmeitz2, Massimo Mischi1, Yonina C. Eldar3 and Ruud J.G. van Sloun1,

1 Eindhoven University of Technology, Eindhoven, The Netherlands.

2 Philips Research, Eindhoven, The Netherlands.

3 Weizmann Institute of Science, Rehovot, Israel.

Keywords: medical imaging

1. Introduction

Ultrasound imaging is a unique and invaluable diagnostic tool due to its low cost, portability and real-time nature. Today’s ultrasound image reconstruction relies on delay-and-sum (DAS) beamforming, which enables real-time imaging thanks to its low complexity but suffers from poor image quality. While advanced adaptive beamforming methods, such as minimum variance (MV) beamforming, significantly improve on DAS in terms of image quality, their high complexity hampers real-time implementation.
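For reference, these are the textbook per-pixel forms of the two beamformers; they are standard formulations, not taken from this abstract. Let $\mathbf{x} \in \mathbb{C}^N$ be the TOF-corrected samples of the $N$ array elements for one pixel, so that after TOF correction the steering vector is the all-ones vector $\mathbf{1}$. Let $\mathbf{R}$ be the spatial covariance of $\mathbf{x}$, which in practice is estimated, e.g. with subarray averaging and diagonal loading.

```latex
y_{\text{DAS}} = \frac{1}{N}\sum_{n=1}^{N} x_n = \frac{1}{N}\,\mathbf{1}^{H}\mathbf{x}

\mathbf{w}_{\text{MV}} = \arg\min_{\mathbf{w}} \; \mathbf{w}^{H}\mathbf{R}\,\mathbf{w}
  \quad \text{s.t.} \quad \mathbf{w}^{H}\mathbf{1} = 1
\;\;\Rightarrow\;\;
\mathbf{w}_{\text{MV}} = \frac{\mathbf{R}^{-1}\mathbf{1}}{\mathbf{1}^{H}\mathbf{R}^{-1}\mathbf{1}},
\qquad
y_{\text{MV}} = \mathbf{w}_{\text{MV}}^{H}\,\mathbf{x}
```

DAS costs $O(N)$ operations per pixel. MV requires solving an $N \times N$ system, $O(N^3)$ per pixel, which is the complexity that hampers real-time MV imaging.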

Over the past years, deep learning has proven to be a powerful tool for a variety of data processing tasks, and it has naturally also found application in ultrasound beamforming. While versatile general-purpose network structures such as stacked auto-encoders are commonly proposed, such networks notoriously rely on vast amounts of training data to yield robust inference under a wide range of (clinical) conditions. They also have a large memory footprint, which complicates resource-limited implementations.

Here we propose an efficient model-based deep learning approach directly inspired by adaptive beamforming algorithms [1,2]. Rather than producing the final beamformed signal, the method utilizes a neural network as an intelligent agent in parallel with the beamforming path, computing an optimal set of content-adaptive beamforming parameters. This solution yields a robust and data-efficient adaptive beamformer able to facilitate fast high-quality imaging, while being predictable and interpretable.

2. Materials And Methods

The intelligent agent was implemented as a four-layer fully connected neural network with sign- and dynamic-range-preserving activation functions, and was trained specifically to predict a set of array apodization weights from time-of-flight (TOF) corrected channel data. The weights are determined such that, when applied to the TOF-corrected channel data, the resulting beamformed signal matches a high-quality MV-beamformed target. We evaluated the network on two different acquisition schemes: plane wave (PW) and synthetic aperture (SA) imaging.
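The agent's role in the beamforming path can be sketched as follows. The network maps one pixel's TOF-corrected channel samples to apodization weights, and the beamformed value is their weighted sum; DAS is the special case of uniform weights. This is a hypothetical, untrained illustration. The layer widths, initialization, real-valued samples and the tanh placeholder activation are assumptions, and unlike the activations described above, tanh does not preserve dynamic range. The training loss against an MV target is written for a single pixel only.

```python
import math
import random

random.seed(1)
N_CH = 8     # number of array elements (hypothetical)
HIDDEN = 16  # hidden layer width (hypothetical)


def dense(n_in, n_out):
    """Randomly initialized fully connected layer (untrained)."""
    W = [[random.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out


LAYERS = [dense(N_CH, HIDDEN), dense(HIDDEN, HIDDEN), dense(HIDDEN, HIDDEN), dense(HIDDEN, N_CH)]


def agent(x):
    """Four-layer fully connected agent: TOF-corrected channel samples -> apodization weights."""
    h = x
    for k, (W, b) in enumerate(LAYERS):
        h = [sum(w * v for w, v in zip(row, h)) + bias for row, bias in zip(W, b)]
        if k < len(LAYERS) - 1:
            h = [math.tanh(v) for v in h]  # placeholder activation (assumption)
    return h


def beamform(x, weights):
    """Apodized sum of TOF-corrected channel samples for one pixel."""
    return sum(w * v for w, v in zip(weights, x))


def training_loss(x, y_mv):
    """Squared error between the agent-apodized output and an MV-beamformed target value."""
    return (beamform(x, agent(x)) - y_mv) ** 2


x = [0.9, 1.1, 1.0, 0.2, -0.1, 1.0, 0.95, 1.05]  # made-up TOF-corrected samples
das = beamform(x, [1.0 / N_CH] * N_CH)           # DAS: uniform weights
adaptive = beamform(x, agent(x))                 # content-adaptive weights (untrained here)
```

Keeping the network beside the beamforming path, rather than having it output pixels directly, means the final image is always a weighted sum of the measured channel data, which is what makes the approach predictable and interpretable.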

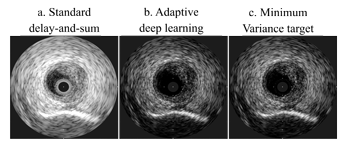

Figure 1. Synthetic Aperture (intravascular) image reconstructions of the three evaluated beamformers

3. Results And Discussion

Our deep learning solution yields a high-contrast reconstruction comparable to the MV target, at a fraction of the computational cost: it requires roughly 2-5% of the floating-point operations needed by MV beamforming. Compared with conventional delay-and-sum beamforming, we also measured increased resolution (20% for PW, 57% for SA) and contrast (5% for PW, 26% for SA).


References

[1] Luijten, B. et al., Deep Learning for Fast Adaptive Beamforming. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2019, 1333-1337.

[2] Luijten, B. et al., Adaptive Ultrasound Beamforming using Deep Learning, arXiv: 1909.10342, 2019. [Online]. Available: https://arxiv.org/abs/1909.10342