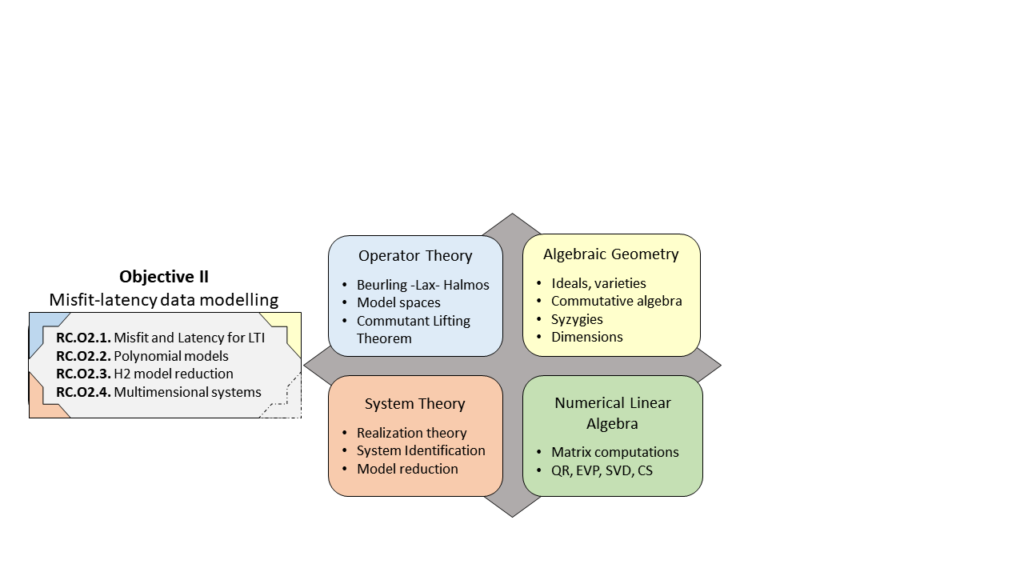

Objective 2: Data Modelling in the misfit-latency framework

In the misfit versus latency framework, we deal with inexact data by

- modifying the data (in a least-squares sense) with misfits,

- adding unobservable, latent inputs.

The misfit-latency identification problem is a multivariate polynomial optimization problem. The least-squares objective function contains weighting parameters, whose user-defined values lead to different identification methods, providing a unifying framework for all known (and new) linear time-invariant (LTI) single-input single-output (SISO) identification methods.

We claim that least-squares optimal identification of SISO LTI models reduces to a (multiparameter) eigenvalue problem.
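As a minimal illustration of how a least-squares misfit problem can turn into an eigenvalue problem, consider the simplest static case, which is not the dynamic LTI problem addressed here: fitting a line a·x + b·y = 0 through the origin when both coordinates are corrected. Minimizing the sum of squared corrections subject to a² + b² = 1 is a polynomial optimization problem whose first-order conditions are an ordinary eigenvalue problem for a 2×2 matrix. The function name `fit_line_min_misfit` and the test data are hypothetical and chosen only for this sketch.

```python
import numpy as np

def fit_line_min_misfit(points):
    """Fit a*x + b*y = 0 with a^2 + b^2 = 1, minimizing the squared misfit."""
    D = np.asarray(points, dtype=float)       # one row per data point (x, y)
    gram = D.T @ D                            # 2x2 symmetric matrix
    eigvals, eigvecs = np.linalg.eigh(gram)   # eigenvalues in ascending order
    normal = eigvecs[:, 0]                    # eigenvector of the smallest eigenvalue
    misfit = D @ normal                       # signed distance of each point to the line
    corrected = D - np.outer(misfit, normal)  # corrected data satisfy the model exactly
    return normal, eigvals[0], corrected

# Illustrative data (assumption): points on y = 2x plus small noise.
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 50)
exact = np.column_stack([x, 2.0 * x])
noisy = exact + 0.01 * rng.standard_normal(exact.shape)

normal, cost, corrected = fit_line_min_misfit(noisy)
slope = -normal[0] / normal[1]                # line written as y = slope * x
```

In this toy case the smallest eigenvalue equals the minimal squared misfit, and the corresponding eigenvector gives the model parameters. The dynamic problem described above is expected to need a multiparameter generalization rather than a single symmetric eigenvalue problem.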

Challenge 1:

Misfit and latency modelling for LTI models

SISO LTI models

- Show that the globally optimal solution of the polynomial optimization problem follows from a multiparameter eigenvalue problem (MEVP)

- Characterize the optimal model parameters, misfits and latencies

- Shed a new light on the many heuristic identification algorithms that have been considered in the literature

- Settle the question of least-squares optimality of subspace identification algorithms, or of modified versions of them

- Generate user-friendly software for least squares identification

Multiple-input multiple-output (MIMO) LTI models

The main difficulty with MIMO LTI models is their parametrization, the theory of which is very complicated. We intend to use state space models, which are over-parametrized.

- MIMO misfit

- Use norm-preserving isometrically embedded MIMO state space models

- Formulate and solve the misfit problem in higher dimensional lifted spaces and project the result back to the lower dimensional space

- MIMO latency

This boils down to the optimality of subspace methods.

- Combining misfit and latency

Challenge 2:

Extensions to polynomial models

- Tackle identification problems for polynomial nonlinear systems

- Use our MEVP approach to verify and generalize recent results about realization theory for rational systems

- Study neural networks with multivariate polynomial activation functions, for which least-squares training is a multivariate polynomial optimization problem that should in turn lead to an eigenvalue problem
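The last point can be illustrated in the smallest possible setting: a single neuron with a squaring activation and one scalar weight. This is a univariate toy case, not the multivariate problem targeted here. The least-squares loss is a polynomial in the weight, its stationary points are the roots of a cubic, and those roots are the eigenvalues of a companion matrix. The function name `train_square_neuron` and the data are hypothetical.

```python
import numpy as np

def train_square_neuron(x, y):
    """Globally minimize J(w) = sum(((w * x_i)**2 - y_i)**2) over the scalar w."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    s4 = np.sum(x**4)
    s2y = np.sum(x**2 * y)
    # dJ/dw = 4*s4*w^3 - 4*s2y*w, in monic form: w^3 + c2*w^2 + c1*w + c0
    c2, c1, c0 = 0.0, -s2y / s4, 0.0
    # Companion matrix whose eigenvalues are the roots of the monic cubic
    companion = np.array([[0.0, 1.0, 0.0],
                          [0.0, 0.0, 1.0],
                          [-c0, -c1, -c2]])
    stationary = np.linalg.eigvals(companion)
    real_pts = stationary[np.abs(stationary.imag) < 1e-9].real

    def loss(w):
        return np.sum(((w * x) ** 2 - y) ** 2)

    # Evaluate the loss at every real stationary point and keep the best one
    return min(real_pts, key=loss), loss

# Illustrative data (assumption): generated with weight 1.5, no noise.
x_data = np.array([0.5, 1.0, 1.5, 2.0])
y_data = (1.5 * x_data) ** 2
w_opt, loss = train_square_neuron(x_data, y_data)
```

Because all stationary points are enumerated, the selected weight is a global minimizer rather than a local one. The loss is even in the weight, so `w_opt` and `-w_opt` are equally good.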

Challenge 3:

H2 model reduction

We intend to solve this long-standing open problem by proving that the optimizing poles can be found from the solution of an MEVP.
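For reference, a standard way to state the problem for a stable discrete-time SISO transfer function is sketched below. The notation (G for the given model of order n, G_r for the reduced model of order r, h_k for the impulse response) is assumed here and does not come from the text above.

```latex
\min_{\substack{G_r \ \text{stable} \\ \text{order}(G_r) = r < n}} \; \| G - G_r \|_{H_2}^2,
\qquad
\| G \|_{H_2}^2
  = \frac{1}{2\pi} \int_{-\pi}^{\pi} \bigl| G(e^{j\omega}) \bigr|^2 \, d\omega
  = \sum_{k=0}^{\infty} h_k^2 .
```

The second equality is Parseval's identity. The cost is a rational function of the poles and zeros of G_r, which is why its stationarity conditions form a system of polynomial equations.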

Challenge 4:

Data driven system identification for multi-dimensional shift invariant systems

Exact and least-squares realization of mD autonomous systems

We start from a generalized Hankel matrix containing the outputs of the system and decompose it into an mD observability matrix and a state sequence matrix, from which the model parameters will be derived.
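The one-dimensional analogue of this step can be sketched as follows, assuming exact data from an autonomous system x_{k+1} = A x_k, y_k = C x_k. The Hankel matrix of outputs is factored by a singular value decomposition into an observability matrix and a state sequence, and A follows from the shift structure of the observability matrix. The function name `realize_autonomous` and the example system are hypothetical. The mD case needs a generalized Hankel matrix and several shift matrices, which this sketch does not cover.

```python
import numpy as np

def realize_autonomous(y, order, rows):
    """Recover (A, C, x0) up to a change of state basis from outputs y_0, y_1, ..."""
    y = np.asarray(y, dtype=float)
    cols = len(y) - rows + 1
    H = np.array([y[i:i + cols] for i in range(rows)])   # H[i, j] = y[i + j]
    U, s, Vt = np.linalg.svd(H, full_matrices=False)
    sqrt_s = np.sqrt(s[:order])
    obsv = U[:, :order] * sqrt_s            # observability matrix [C; CA; CA^2; ...]
    states = sqrt_s[:, None] * Vt[:order]   # state sequence [x_0, x_1, ...]
    # Shift invariance: obsv without its last row, times A, equals obsv without its first row
    A = np.linalg.lstsq(obsv[:-1], obsv[1:], rcond=None)[0]
    C = obsv[:1]
    x0 = states[:, 0]
    return A, C, x0, s

# Illustrative second-order system (assumption), simulated without noise.
A_true = np.array([[0.9, 0.2], [-0.2, 0.7]])
C_true = np.array([1.0, 0.0])
x = np.array([1.0, 1.0])
y = []
for _ in range(20):
    y.append(C_true @ x)
    x = A_true @ x

A, C, x0, s = realize_autonomous(y, order=2, rows=5)
y_hat = [(C @ np.linalg.matrix_power(A, k) @ x0).item() for k in range(len(y))]
```

The recovered `A` generally differs from `A_true` by a similarity transformation, so only basis-independent quantities such as its eigenvalues and the reproduced outputs should be compared. With exact data the singular values beyond the model order are numerically zero. With noisy data they are not, which is where a misfit theory becomes necessary.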

Misfit theory for mD realization

In order to deal with real data, which never satisfy the model equations exactly, we will develop a misfit theory for mD systems.

Data driven mD identification with inputs

- Study the system theoretic properties of partial difference equations

- Identification from exact data

- Least squares misfit minimization using our MEVP approach