Robert M. Corless

Editor-in-Chief, Maple Transactions

Emeritus Distinguished University Professor, Western University, London, Ontario, Canada

Bohemian Matrices: An introduction and some open problems

11 October 2023, 2pm

KU Leuven, Aula Arenbergkasteel (01.07)

Abstract

The name “Bohemian matrices” was proposed for a field that has actually been studied for many years now by different researchers, not recognizing their commonality. The name comes from an acronym for BOunded HEight Matrices, of Integers, although the restriction to integers has been subsequently relaxed. The “height” of a vector is its infinity norm, and the “height” of a matrix A is the infinity norm of vec(A). The word “height” has been commonly used in the context of univariate polynomials expressed in the monomial basis: the height of a polynomial is the infinity norm of the vector of coefficients. We say “characteristic height” for the height of the characteristic polynomial of a matrix A.

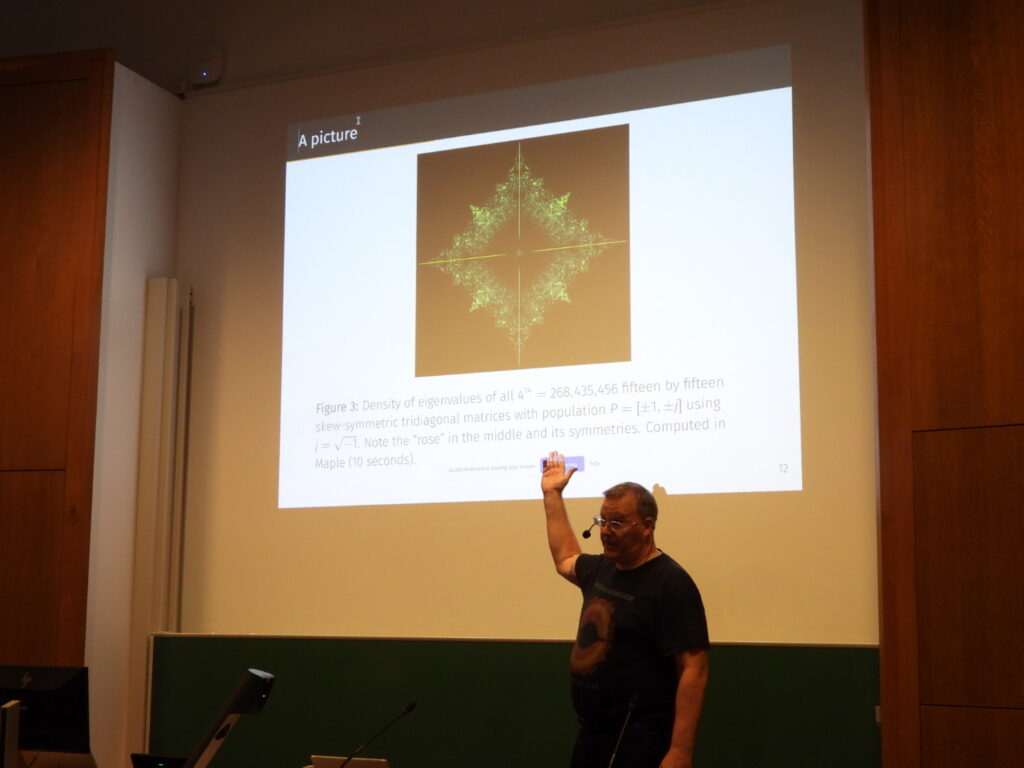

The idea is to restrict the study to families of matrices all of whose entries come from a finite (and hence bounded) set, called the population. Well-known examples include zero-one or binary matrices, Bernoulli matrices whose entries are either 1 or -1, and sign pattern matrices where the entries can be 1, 0, or -1. One can then study Bohemian families of structured matrices, say upper Hessenberg Toeplitz Bohemian matrices, or complex symmetric tridiagonal Bohemian matrices, or symmetric matrices with entries from a restricted population, or Bohemian companion matrices, or other families.

In this talk I will survey some known results, and discuss some problems that remain open. In particular, I will ask for help in finding algorithms to construct “minimal height” companion matrices for polynomials with integer coefficients; such things exist (possibly not uniquely) but there is no known good algorithm for constructing them. I will give examples where the height of the companion matrix is exponentially smaller (in the degree/dimension) than is the characteristic height. This seems interesting because lower height offers the hope of better eigenvalue conditioning. But even that is not well-understood.

This is joint work with many people.